Facial Expression Analysis and Auditory Perception

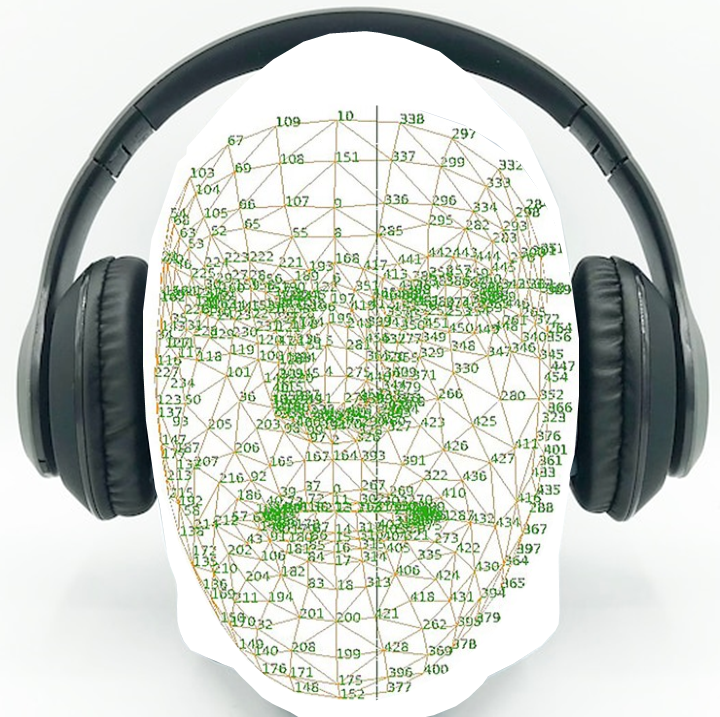

The aim of this project is to identify, characterize, and link subtle facial expressions to the sensory and affective dimensions of loudness. This effort builds on insights from pain research. Facial expression analysis of perceived pain has established a range of facial expressions that correlate with either the sensory or the affective dimension of pain. The affective dimension (how unpleasant the pain is) elicits a different range of facial expressions than the sensory dimension (how much pain there is). Sound perception is characterized by analogous dimensions: how pleasant or unpleasant the sound is, and how loud it is. Interestingly, the perception of pain and sound can overlap, for instance for loud sounds and in auditory disorders such as hyperacusis. It has yet to be demonstrated whether these dimensions correlate with distinct sets of facial expressions, and whether the perceived features of sound can be decoded from facial movements. To address this knowledge gap, we are analyzing reactions to auditory stimuli of different valence and intensity in a large hybrid video+behavior dataset. Data analysis involves computational linguistics, video and facial expression analysis using data-driven approaches, and machine learning techniques. This project is an important stepping stone toward methods that evaluate subjective perception in a subject-independent manner. Such methods are fundamental to addressing the replicability issues of self-report-based studies. The immediate clinical and industrial applications include the calibration of hearing aids, reactive systems for sound feature control, and the evaluation of the perceived auditory environment in non-verbal subjects. This last application has life-changing potential for people affected by hearing disorders alongside other debilitating conditions that complicate the accommodation of their needs.