Research Projects

Speech Production in Challenging Listening Conditions: Investigating the Effects of Noise, Ear Occlusion, and Hearing Impairment

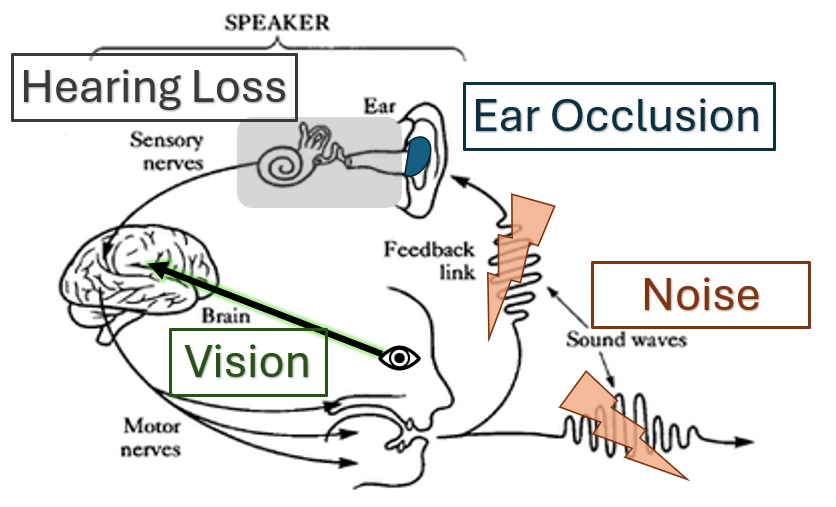

When we speak, hearing ourselves in our acoustic environment calibrates our speech production. My research explores this process in various contexts. For example, in noisy settings, we automatically raise our voices, a response known as the Lombard effect. However, wearing hearing protection in these environments alters how we perceive our own voice and, in turn, how we speak. My first project examines how ear occlusion and noise affect speech by analyzing acoustic features from a speech database. My second project investigates the effect of hearing loss on speech production at different noise levels. My final project studies how vision and room acoustics together influence speech by having participants speak in various virtual and auditory environments.

Student: Xinyi Zhang

Longitudinal data collection from patients with Alzheimer's disease using a hearable

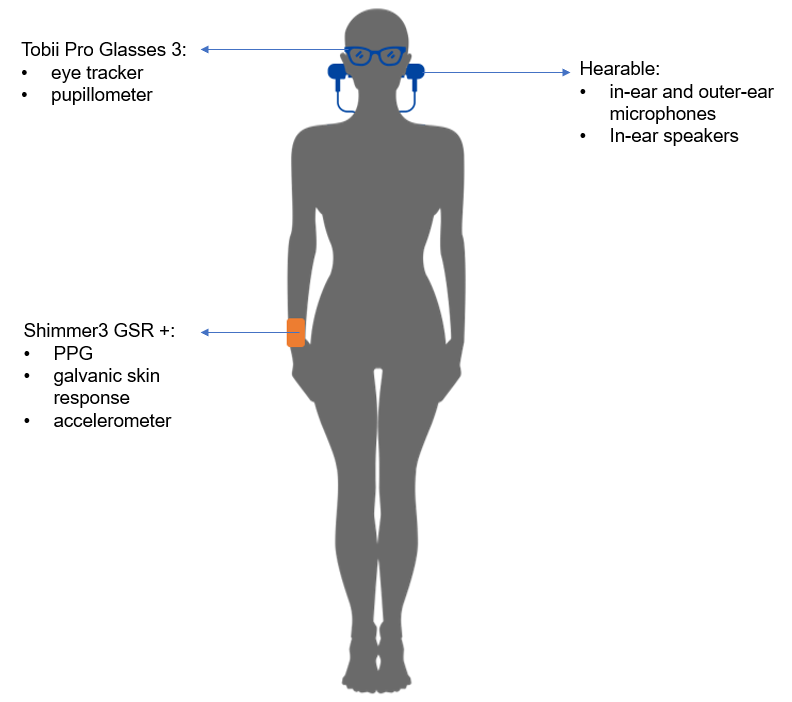

Sounds produced inside the human body convey important information about a person's health. For example, teeth grinding can indicate anxiety, and an elevated heart rate may signal emotional agitation. Hearables, which are wearable devices worn in the ear, are effective tools for capturing these internal sounds. These devices amplify low-frequency vibrations, enhancing internal audio signals. Alzheimer's disease, which impairs cognitive and motor abilities, is linked to changes in the central auditory system. Results on central auditory tests, such as the Hearing in Noise Test (HINT) and Triple Digit Test (TDT), are related to cognitive functions like learning and memory. Conducting these tests with hearables is non-invasive, sensitive, and quick, and it can be done by untrained individuals, which makes the approach well suited to early Alzheimer's detection, especially in developing countries. Analyzing internal audio signals with hearables could enable early prediction of Alzheimer's disease.

Student: Miriam Boutros

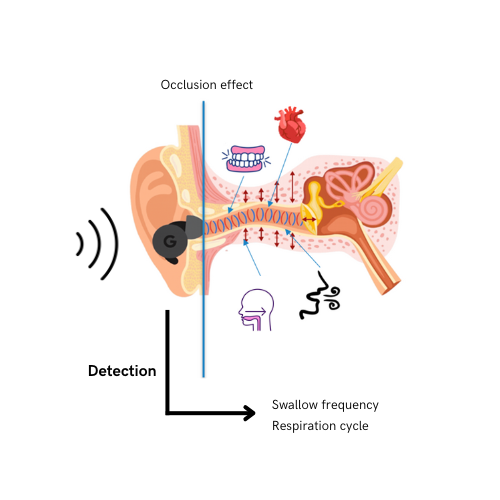

Detection of swallowing and breathing using an in-ear microphone

In health monitoring, in-ear microphones can capture sound patterns that indicate poor coordination between swallowing and breathing, which is crucial for conditions such as sialorrhea (excessive drooling) in people with Parkinson's disease. By detecting the frequency of swallowing and the timing of the respiratory cycle, we can potentially test the hypothesis that excessive drooling in people with Parkinson's disease is due to impaired swallowing rather than excessive saliva production. To achieve this, ultrasound serves as a control method to distinguish spontaneous and food swallowing from other activities, yielding a labeled dataset for a machine learning algorithm.

Student: Elyes Ben Cheikh

Integrating YAMNet and NMF for Bioacoustic Source Separation using In-ear Microphones

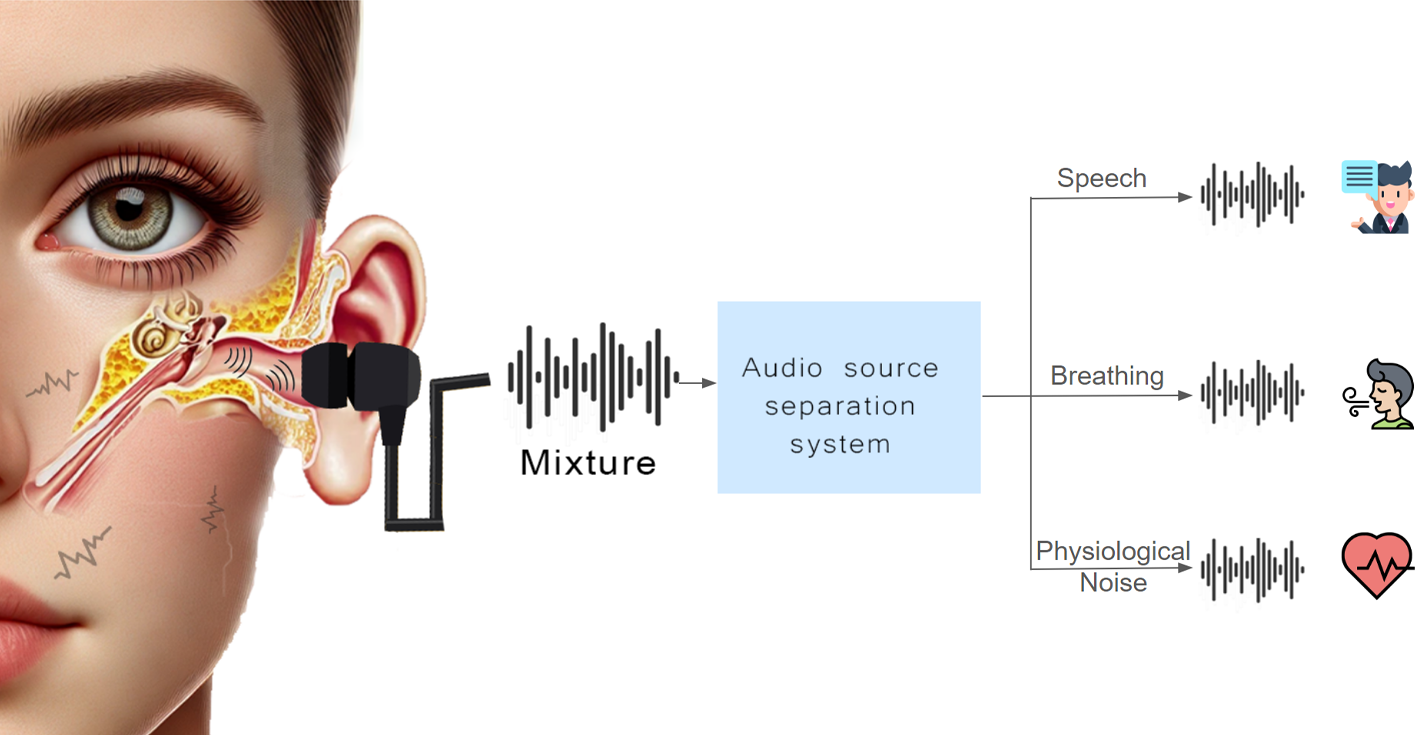

When an in-ear microphone captures sounds from inside the ear, signals such as speech, breathing, and physiological noise overlap in both time and frequency, complicating their use in health monitoring devices. This overlap makes it difficult to separate the individual signals, a step that is crucial for accurately interpreting bioacoustic data. The objective of this research is to develop a source separation system capable of separating these overlapping signals, ultimately enhancing the precision and reliability of health insights from wearable devices, especially for monitoring key physiological signals like respiration and heart rate.
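
To make the NMF part of the idea concrete, here is a minimal sketch of non-negative matrix factorization applied to a toy magnitude spectrogram. It is an illustration under stated assumptions, not the project's actual pipeline: the project description does not say how YAMNet and NMF are combined, so YAMNet is left out entirely, the data are synthetic, and every name (`nmf`, `soft_masks`, the templates and activations) is hypothetical. The updates used are the standard multiplicative rules for the Euclidean cost.

```python
import numpy as np


def nmf(V, n_components, n_iter=500, eps=1e-10, seed=0):
    """Approximate a non-negative matrix V (freq x time) as W @ H.

    Uses multiplicative updates for the Euclidean (Frobenius) cost.
    W holds spectral templates, H holds their activations over time.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_time = V.shape
    W = rng.random((n_freq, n_components)) + eps
    H = rng.random((n_components, n_time)) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


def soft_masks(W, H, eps=1e-10):
    """Return one soft mask per component, each with values in [0, 1].

    The masks sum to about 1 wherever the model W @ H has energy.
    """
    total = W @ H + eps
    return [np.outer(W[:, k], H[k]) / total for k in range(W.shape[1])]


# Toy data (assumption): two synthetic "sources" with different
# spectral shapes and random activations, mixed additively.
rng = np.random.default_rng(1)
n_freq, n_time = 64, 100
freqs = np.arange(n_freq)
template_a = np.exp(-0.5 * ((freqs - 10) / 3.0) ** 2)  # low-frequency source
template_b = np.exp(-0.5 * ((freqs - 40) / 5.0) ** 2)  # higher-frequency source
V = np.outer(template_a, rng.random(n_time)) + np.outer(template_b, rng.random(n_time))

W, H = nmf(V, n_components=2)
masks = soft_masks(W, H)
estimates = [m * V for m in masks]  # per-component magnitude estimates
rel_error = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {rel_error:.4f}")
```

In a real system the input would be the magnitude of a short-time Fourier transform of the in-ear recording, and some additional step would be needed to decide which learned components correspond to speech, breathing, or other sources; that assignment is beyond this sketch.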

Student: Yassine Mrabet

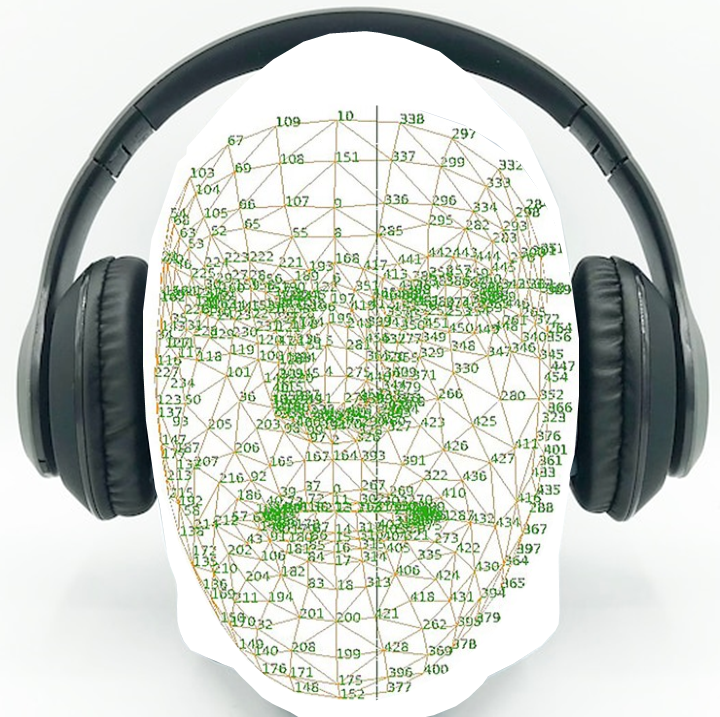

Facial Expression Analysis and Auditory Perception

This project aims to link subtle facial expressions to the sensory and affective dimensions of loudness. Drawing on insights from pain research, we explore whether different facial expressions correlate with how pleasant or loud a sound is perceived to be. We analyze reactions to auditory stimuli in a large video and behavior dataset, using computational linguistics, facial expression analysis, and machine learning. This research could improve methods for evaluating subjective perception objectively, benefiting clinical and industrial applications such as hearing aid calibration and sound environment evaluation for non-verbal subjects.

Student: Alessandro Braga